aNewDomain — Will phase-change memory solutions for computers and robots enable a great leap forward in the evolution of artificial intelligence? Will it lead us into a future where thinking machines become human-like enough to take on many of today's more boring and dangerous human tasks, while also serving as our personal assistants?

Phase-Change Memory to Replace NAND

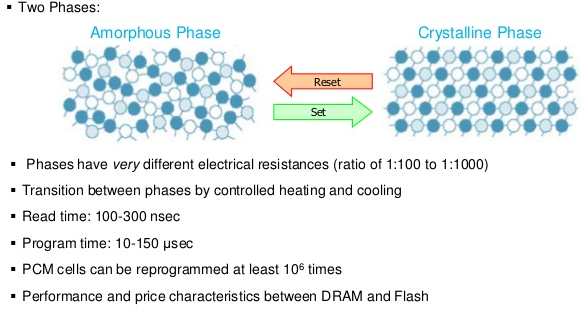

Phase-change memory is being actively pursued to ultimately replace today’s NAND memory (i.e., flash memory). At the time of this writing, NAND is the most advanced computer memory system that we have. However, for our ever-increasing AI needs and demands, NAND is coming up against limits.

Image Courtesy of IBM and ExtremeTech

According to Xilin Zhou of the Shanghai Institute of Microsystem and Information Technology at the Chinese Academy of Sciences:

- Flash memory is limited in its storage density when devices get smaller than 20 nanometers. Phase-change memory devices can be smaller than 10 nanometers, allowing more memory to be squeezed into tinier spaces.
- Data can be written into phase-change memories very quickly.
- Phase-change memory devices would be relatively inexpensive to produce.

A spokesman for the U.S. computer memory supplier Memory Data Systems says:

“It's truly exciting news that researchers believe they can mimic the human brain's analytical capabilities using phase-change memory chips. The development is certainly not advanced enough to lead to independent AI-type machines just yet, but computer memory solutions are growing more sophisticated every day.”

Advances in AI

Building artificial intelligence that mimics the human brain matters enormously to the advancement of computers and robots, and therefore to humanity's future. AI's evolution has been exciting and, at times, frustrating.

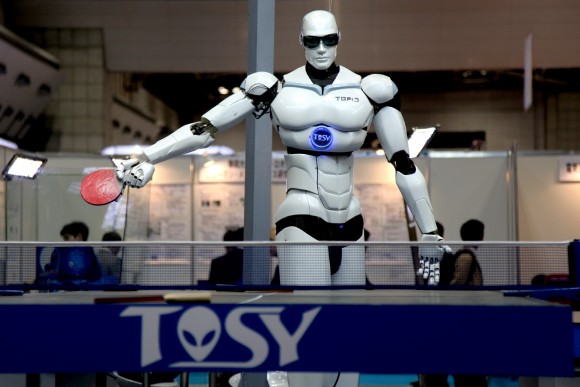

By Humanrobo (Own work) [CC BY-SA 3.0], via Wikimedia Commons

Dharmendra Modha is a senior researcher at IBM Research in Almaden, California. He leads one of two groups that, under the aegis of the $100 million Synapse program, have built computer chips with a basic architecture copied from the mammalian brain. As Modha puts it, “Modern computers are inherited from calculators, good for crunching numbers. Brains evolved in the real world.”

Carver Mead shares this insight. Mead, a professor at the California Institute of Technology, is one of the fathers of modern computing. He first became known in the computing industry for helping develop very-large-scale integration (VLSI), which allowed manufacturers to design and produce far more complex microprocessors.

Reflecting on the efficiency and cooling problems caused by the waste heat of ever more complex chips, Mead said, “The more I thought about it, the more it felt awkward.” So he began dreaming of parallel-processing computers. However, “the only examples we had of a massively parallel thing were in the brains of animals.”

While many people are excited about and looking forward to increasing the power of artificial intelligence and making it more human-like, many others feel a natural antipathy toward the endeavor and fear a time when our machines may replace us.

Is the man-and-machine future going to take us into the Star Wars galaxy, or will it bring about the era that led to the Butlerian Jihad?

I suspect that’s entirely up to us.

For aNewDomain, I’m Brant David.
