aNewDomain — Remember Google Glass? I do. I fantasized about the potential of Glass. The possible uses of augmented reality (AR) seemed endless, and a treat for consumers like me. I could play some Ingress or my favorite Android game, or call a friend with a glance. It was going to be cool. But then society and the media decided that Glass and other headsets looked stupid. They had no “cool” factor, you see.

Now, I know the picture above screams “Cool!” So cool, in fact, that we’ll show it twice. But let’s forget this picture’s cool factor for a minute. At MWC 2015, I had the opportunity to speak with Fujitsu as it showed off its version of augmented reality. What’s the difference between what Fujitsu is actually doing and my own hopes and dreams for augmented reality?

Fujitsu’s real-world application is the difference.

Our world is hung up on mass production and the capacity for profit. With mass production, we consumers are often stuck with poor quality assurance in the products we love. Order a new keyboard, and the “T” key pops off within a week. Grab the latest Samsung Galaxy smartphone, and the home button constantly sticks. It’s not a great experience, considering all the money we pour into our devices.

Yes, Microsoft showed off HoloLens recently, but what are its use cases? Where would we use it in the “real world”? Is it even useful? Fujitsu demonstrated to me how its augmented reality can aid mass production and manufacturing with its Software Interstage Processing Server, all by integrating a head-mounted camera and LCD, a couple of Android mobile devices and QR codes. It’s a real-world scenario.

All image credits: Ant Pruitt

Let’s build something

Hypothetically, let’s look at a factory producing automobile parts. Each factory worker is responsible for a specific component of production as well as its installation. Ideally, machines extrude and press metal into specified shapes to create the automobile components. Sometimes, though, the parts may be 1/8 inch too small or have other structural defects, and the human eye won’t always notice these issues.

This is where Fujitsu steps in: the AR camera and software built into its device can easily scan the components for quality assurance. If everything checks out with the parts, the employee confirms it on the network-connected Android device simply by tapping “OK.”

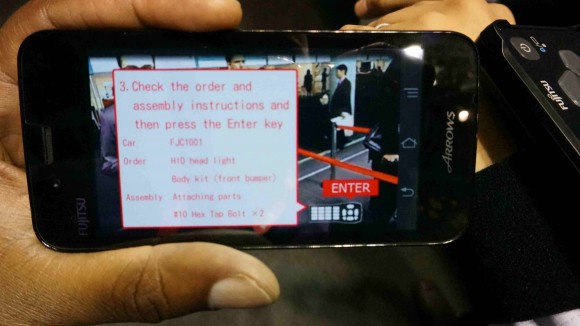

This confirmation data is sent back to the central processing server, which updates the production status of the component. The data can be viewed on the employee’s mobile device as well as on other interested devices, such as the supervisor’s mobile devices. Think of Iron Man and Jarvis. The interface you see there shows how the camera and scanners interact with all of the components at hand. The Fujitsu software (just a step behind Tony Stark’s!) adds one more layer of quality assurance: the handheld device forces the user to confirm that each step was completed correctly, as seen below.

I couldn’t believe what I saw as this AR device scanned and processed various parts. I was handed another Android mobile device that used the screen cast feature to show me exactly what the Fujitsu rep saw on his heads-up display (HUD). As he scanned bolts and QR codes, my mobile device showed the same overlay and confirmation prompts.

It was amazing to see this in action, functioning seamlessly in real time. I enjoyed watching the Interstage system handle potential user errors and exceptions, such as grabbing the wrong part from the stockpile. If you’re holding an incorrect part, the mobile device (or HUD) will warn you and won’t let you proceed to the next step. Notice the QR codes and overlay.

The Future of AR

For this demonstration, I was shown how a part for a hypothetical car engine is installed by hand. The software walks the user through installing the component properly, with a video aid on the HUD or mobile device. As I watched this video aid, I felt like I was watching an online tutorial. It was that direct and simple.

My experience at the Fujitsu booth blew me away. I truly could not believe what I had just seen. My own assumptions about AR had, for the most part, been limited. I thought it would remain too expensive for people to use in a realistic context, like gaming or social media. But Fujitsu showed me real-world, real-time use cases for AR.

Technology and innovation are great, but do they matter if they exist only for their own sake? Why not put these incredible innovations to use for “regular” people, not just geek culture? Having the Software Interstage Processing Server in place to make tasks like mass manufacturing easier is a really good start.

What’s next for augmented reality? What uses can we find for it in our humble abodes? How about using AR in the kitchen? I can imagine a HUD that scans my oven and ribeye steak to make sure the temperature doesn’t go a degree past medium-rare. I can even picture scanning the refrigerator with the HUD. Not for inventory, because anyone with an eyeball can see if they’re out of milk or eggs. But what about the mystery leftovers from a few days ago? Your HUD could scan the leftovers to detect spoilage and warn you if the food is no longer fresh, sagely advising you that the item should go into the compost bin.

There are, in short, so many possibilities for augmented reality. What do you think are some real-world use cases of AR? What implementation of AR do you see as a game changer, something that’s really going to shake up the world as we know it? Because I saw a small glimpse of the future with Fujitsu, and I can’t wait.

For aNewDomain, I’m Ant Pruitt.

Ant, can you compare the Fujitsu device to the HoloLens?

I think they’re very similar at a glance, but the thing is, I saw this one in action. I saw the direct connection between scanning objects and having those objects verified by a back end. Very cool.

Thanks for reading, Mr. Press!

-RAP, II

Thanks for writing!