aNewDomain — Cornell University researchers announced this week that they’ve made some major progress in their attempt to build a smart, super-sensitive robotic “soft hand.”


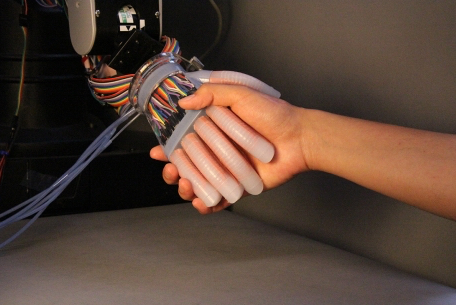

The resulting prosthetic hand technology, described in a research paper published in the new journal Science Robotics, comes closer to the real thing than any robotic hand ever has, the researchers say. They add that the soft hand even begins to mimic the finer aspects of the human sense of touch.

Read on to find out how their so-called Gentle Bot technology works, what optical waveguides have to do with it, and which commercial, industrial and entertainment uses are likely to be the first to put a human-feeling, super-responsive robotic hand to work.

But first, check out Cornell researchers’ demonstration video of the Gentle Bot-enabled soft hand. It is wild.

Now, scientists have long envisioned, and understood the need for, prosthetic hands and AI-controlled robotic hands with the subtle, fine sense of touch of a human, but the cost and difficulty of developing them were prohibitive.

Westworld, in other words, was all but unreachable.

But then Robert Shepherd of Cornell’s Organic Robotics Lab decided to approach the challenge in a whole new way. He and his team set aside what they already knew about building prosthetic and robotic hands and started from scratch, forgoing the bulky, rigid mechanical parts and motors that typically go into high-end prosthetics.

Instead, Shepherd and his team set out to build a smart, soft robotic hand based around optical technology, which they believed could look, work and operate a lot more like a human hand.


Optical waveguides (optical fiber is one example) are the game-changing component in the Gentle Bot innovation the team describes.

Because waveguides use light instead of electrical sensors to communicate, soft robotic hands based on them can be less bulky.

The Gentle Bot design embeds sinewy, flexible waveguides inside the robot hand, all the way to its fingertips. These components move and flex along with the fingers, in a way that mimics human anatomy more closely than any artificial limb, prosthesis or robot has to date.

“Most robots today have sensors on the outside of the body that detect things from the surface,” says Huichan Zhao, the Cornell doctoral candidate who authored the research paper, which I’ve embedded below. The team’s soft robot hand, by contrast, carries its sensors inside its body, which makes it essentially “innervated,” she added.

“They can actually detect forces being transmitted through the thickness of the robot, a lot like we and all organisms do when we feel pain,” said Zhao, adding:

“Our human hand is not functioning using motors to drive each of the joints; our human hand is soft with a lot of sensors … on the surface and inside the hand … Soft robotic (technology) provides a chance to make a soft hand that is more close to a human hand.”

The nerve-like waveguides, long and sinewy as they are, are powerful, too. They can measure elongation, curvature and pressure, the researchers said, all key capabilities if you are trying to mimic human touch.

And here’s the secret sauce: waveguides, which once required expensive fabrication processes and facilities, can now be custom printed. Thanks to the advent of affordable soft lithography, even a university researcher laboring under a tight academic budget can make them.

Touch of the future

The Gentle Bot soft hand, by the way, isn’t just some tomato-touching one-trick pony.

It can hold a mug of coffee without spilling it, and shake your hand without feeling too, well, robotic. It also can tell, with just a quick swipe of a single soft finger, whether it is touching a sponge, acrylic or silicone rubber.

But the technology isn’t perfect, of course. Sure, it can tell which of three tomatoes is ripest, but it has trouble telling the difference between a hard, not-yet-ripe tomato and, say, acrylic, says Zhao.

Improving the subtlety and smarts behind this robotic touch system is now a main focus for the researchers. That will likely mean more sophisticated waveguide sensors, machine learning algorithms and improvements to components like the hand’s soft actuators. Once the hand can detect a wider range of pressures, telling acrylic from an unripe tomato gets a good bit easier.
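As a rough, hypothetical illustration of how a single swipe might be turned into a material label, here is a minimal nearest-neighbor classifier in Python. It is not the Cornell team’s method. It assumes each swipe is summarized by two made-up features (mean and peak light loss, in dB), and the reference values in `REFERENCE_SWIPES`, along with the helper names `swipe_features` and `classify_swipe`, are invented for this sketch rather than taken from the paper.

```python
import math

# HYPOTHETICAL reference "fingerprints": (mean loss dB, peak loss dB) per swipe.
# These numbers are invented for illustration; they are NOT Cornell measurements.
REFERENCE_SWIPES = {
    "sponge": [(0.4, 0.9), (0.5, 1.0)],
    "silicone rubber": [(1.2, 2.1), (1.3, 2.3)],
    "acrylic": [(2.0, 3.4), (2.1, 3.6)],
}


def swipe_features(losses_db):
    """Summarize one swipe (a list of loss readings in dB) as (mean, peak)."""
    return (sum(losses_db) / len(losses_db), max(losses_db))


def classify_swipe(losses_db):
    """Label a swipe with the material of its nearest reference fingerprint."""
    features = swipe_features(losses_db)
    best_label, best_dist = None, math.inf
    for label, examples in REFERENCE_SWIPES.items():
        for example in examples:
            # Euclidean distance in feature space (math.dist needs Python 3.8+).
            dist = math.dist(features, example)
            if dist < best_dist:
                best_label, best_dist = label, dist
    return best_label
```

With these made-up references, `classify_swipe([1.0, 1.4, 2.2, 1.3])` lands closest to the silicone rubber examples. The same logic hints at the tomato problem Zhao describes: if a hard, unripe tomato produced readings close to acrylic’s reference values, a matcher like this would mislabel it, and only richer sensing or better features would separate the two.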

Making the design even cheaper and easier to manufacture is a focus, too. One goal is making sure components can be fabricated on site via 3D printing, which would let doctors create custom-fit prosthetic hands, for example.

How it works

But the essentials of this innovation already seem to be in place, the researchers say. At its core is the use of waveguides as the primary signal-carrying system in a robotic hand, playing the same role the nerves in your fingertips play in your sense of touch.

Like your nerves, these flexible components literally bend and flex whenever the soft hand’s fingers come into contact with something, even slightly. Bending or squeezing a waveguide changes how much light can pass through it, so a simple photodiode measures how much light makes it through and reports that reading to the host software. It really is as simple as that.
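To make that measurement chain concrete, here is a minimal Python sketch, assuming the host software compares each photodiode reading with the reading from an unbent finger and converts the ratio into optical loss in decibels (10 × log10 of reference power over measured power), then maps that loss to a bend angle by interpolating a calibration table. The table values, the choice of bend angle as the output, and the function names `loss_db` and `estimate_bend` are hypothetical, for illustration only.

```python
import bisect
import math

# HYPOTHETICAL calibration table: (optical loss in dB, bend angle in degrees).
# Real values would come from bending a specific finger through known angles.
CALIBRATION = [(0.0, 0.0), (0.5, 20.0), (1.2, 45.0), (2.0, 70.0), (3.0, 90.0)]


def loss_db(reference_power, measured_power):
    """Optical power loss in dB relative to the unbent (reference) reading."""
    if reference_power <= 0 or measured_power <= 0:
        raise ValueError("photodiode powers must be positive")
    return 10.0 * math.log10(reference_power / measured_power)


def estimate_bend(loss):
    """Linearly interpolate a bend angle from the calibration table,
    clamping to the table's end points outside its range."""
    losses = [point[0] for point in CALIBRATION]
    if loss <= losses[0]:
        return CALIBRATION[0][1]
    if loss >= losses[-1]:
        return CALIBRATION[-1][1]
    # Index of the first calibration point whose loss exceeds the reading.
    i = bisect.bisect_right(losses, loss)
    (x0, y0), (x1, y1) = CALIBRATION[i - 1], CALIBRATION[i]
    return y0 + (y1 - y0) * (loss - x0) / (x1 - x0)
```

For example, `estimate_bend(loss_db(1.0, 0.8))` turns a 20 percent drop in received light into an angle between the 20- and 45-degree calibration points. Clamping at the ends of the table is a deliberately conservative choice: a reading outside the calibrated range is reported as the nearest calibrated angle rather than extrapolated. Since the researchers say the waveguides also respond to elongation and pressure, a single-number lookup like this could not tell those causes apart on its own.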

You will find far more detail about how the Gentle Bot hand works in the Cornell paper the researchers published this week, which I’ve embedded in full below.

Scroll below the paper to read about a few of the edgy applications Gentle Bot technology could find in artificial intelligence, virtual reality, augmented reality and other uses, and the trippy sci-fi vision the Cornell researchers have for Gentle Bot in the long term.


What’s next for Gentle Bot?

You don’t have to read much of the above research paper to come up with all kinds of imaginative and even edgy potential applications for ultra-sensitive robotic hands that look, feel and work like a real human hand, or anything close.

As tech writers like me have been fond of saying for years now, long before an innovation makes commercial sense, it’ll debut for military uses — usually in the US or Israel. For what it’s worth, the Cornell research paper, above, notes the project is funded by the Air Force Office of Scientific Research (AFOSR).

And after the military, so the old saw goes, comes sex. This is a sector that won’t have to think too hard to find all manner of profitable uses for super-sensitive robotic hands. It’s just as easy, though, to imagine Gentle Bot tech showing up in exciting AI, VR and AR-related entertainment, apps and games, too.

Medical prosthetics are certainly one of the first commercial applications the creators of Gentle Bot are eyeing.

In their research report, the team writes that “our experiments also demonstrate the high resolution of our sensors … as well as their compatibility with soft robotic systems … The application we explored in this work could potentially provide sophisticated grasping and sensory feedback for advanced, custom prosthetics at low cost.”

An early debut in manufacturing and assembly lines seems likely, too, judging by the theme of their demonstration video and write-up. After all, many big food-packing plants already use primitive soft robotic grippers to sort and pack fragile food items.

All these commercial and medical uses aside, though, the researchers behind Gentle Bot say they have far bigger, loftier goals in mind here.

For them the sky, quite literally, is the limit: Gentle Bot’s creators envision entire innervated, bio-inspired robots that will someday explore the furthest reaches of Earth and even space.

Stay tuned. We’ll be following this tech for you as it progresses over the coming year and beyond.

For aNewDomain AI News, I’m Gina Smith.

Credits: Cover image of the Gentle Bot soft prosthetic hand by: Huichan Zhao, Organic Robotics Lab, Cornell University. All Rights Reserved. Inside image of Cornell Gentle Bot researcher Shuo Li shaking hands with the soft hand by: Huichan Zhao, All Rights Reserved.