aNewDomain — Over the past year, big names in technology and science like Elon Musk and Stephen Hawking have warned that the threat of artificial intelligence (AI) gone bad, a la SkyNet in the “Terminator” franchise, might be more than just science fiction.

Elon Musk reasoned that a $10 million challenge and a different approach might be a moral game changer. “Here are all these leading AI researchers saying that AI safety is important,” Musk said in a statement, referring to an open letter signed by a number of leaders in the field. “I agree with them, so I’m today committing $10M to support research aimed at keeping AI beneficial for humanity.” On Twitter, he wrote that AI could be more dangerous than nukes.

Stanford University’s One Hundred Year Study of AI echoes this concern:

We could one day lose control of AI systems via the rise of superintelligences that do not act in accordance with human wishes — and that such powerful systems would threaten humanity. Are such dystopic outcomes possible? If so, how might these situations arise? … What kind of investments in research should be made to better understand and to address the possibility of the rise of a dangerous superintelligence or the occurrence of an intelligence explosion?

Screenshot by David Michaelis courtesy of Collider

How did we get to this point?

We in the 21st century are enthusiastically reimagining the world through technological innovation, but we have lost perspective on the results of our activities. Many of us treat technology as the solution to every problem we face, giving our algorithms almost God-like attributes. We are ashamed that we are not as perfect as an iPhone, ashamed that we are not as perfect as the machinery and gadgets that surround us.

In his critique of technology, Jewish German philosopher Günther Anders wrote, “We are … incapable of running at the speed of transformation which we ourselves ordain for our products and of catching up with the machines.” We try to catch up through science fiction books and films that portray the results of techno-innovation, but these are treated as entertainment rather than as real warnings. Our limited capacity to bridge, emotionally and intellectually, between scientific or technological imagination and moral imagination is a daily challenge. Innovation is advancing exponentially and overwhelming our ability to make sense of it and to tell the good from the bad. Science fiction, however creative, has not bridged the gap.

Millions of human brains are working on innovation, assisted by millions of AI computers that enhance their capacity for calculation and disruption. Quantum computers are ever faster, but our moral sensibilities are not. Moral understanding is part of the slow lane of life, not the fast lane of AI. Too few of us are working to formulate how the moral imagination can be enhanced. I know of no artificial intelligence machine that works with a “moral algorithm.”

A Moral Future?

The U.S. Navy recently invested $6 million in a project to create moral robots that might command “moral drones” that do not shoot at weddings, for example. Without a moral component, these drones could effectively boomerang and turn on us. This is what Musk and Hawking are afraid of: not just Terminator-like dangers but also smart drones turning on their makers.

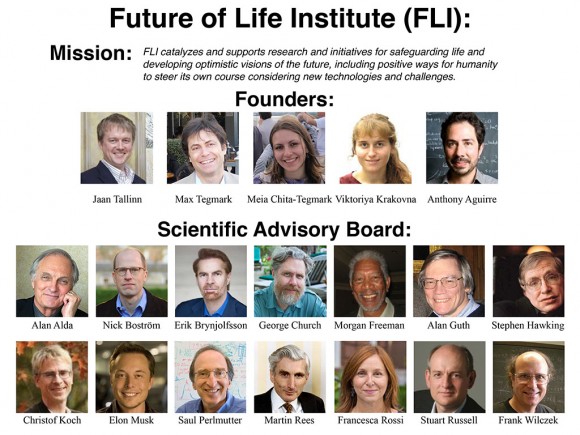

Screenshot by David Michaelis courtesy of Future of Life Institute

The Future of Life Institute, the recipient of Musk’s $10 million commitment to keeping AI beneficial to humans, recently shared a brief titled “Research priorities for robust and beneficial artificial intelligence.” In it, the authors ask:

Can lethal autonomous weapons be made to comply with humanitarian law? If, as some organizations have suggested, autonomous weapons should be banned, is it possible to develop a precise definition of autonomy for this purpose, and can such a ban practically be enforced? If it is permissible or legal to use lethal autonomous weapons, how should these weapons be integrated into the existing command-and-control structure so that responsibility and liability be distributed, what technical realities and forecasts should inform these questions, and how should “meaningful human control” over weapons be defined? Are autonomous weapons likely to reduce political aversion to conflict, or perhaps result in “accidental” battles or wars? Finally, how can transparency and public discourse best be encouraged on these issues?

Politicians are busy with the “War on Terror” while the real future of our children is being stolen. If we rely on the U.S. Navy to find our moral compass, we have clearly failed.

For aNewDomain, I’m David Michaelis.

Featured Image: © agsandrew / Dollar Photo Club