It was with some amazement that I read, a few days ago, of Microsoft’s $1B investment in OpenAI to support Artificial Intelligence research and development in the direction of Artificial General Intelligence (AGI).

When I introduced the term AGI into the public discourse 15 years ago, not many people wanted to hear about it. But over the last few years, the pursuit of AGI has moved from obscurity, harsh skepticism and resource starvation to widespread acceptance and non-trivial funding with tremendous speed — a trend strongly resonant with Kurzweilian visions of a coming Singularity.

The speed with which megacorporations are moving to dominate the rapidly growing AGI scene is also impressive and, given the ethically questionable nature of big tech, profoundly worrisome.

For those of us engaged with developing AGI within alternative, less centralized organizational structures, it’s clear: The game is on.

AGI: From the Shadows to Center Stage

The 2005 book my colleague Cassio Pennachin and I edited, titled Artificial General Intelligence and published by Springer, had contributions from a variety of serious researchers, including a former employee of mine (Shane Legg) who went on to co-found DeepMind, now Google DeepMind.

But at that stage, it was incredibly hard to get government research grant money for anything resembling AGI or human-level AI, let alone corporate interest. AGI was something you discussed with AI professors over a beer after work, along with time travel and the possibility of alien life, not something that came up in the department seminar or in an investor presentation.

The first AGI conference I organized, in 2006, felt like 50 maverick visionary engineers and scientists huddled in a room, plotting a route toward a future that almost nobody else could see as a viable possibility. Since 2008 we have held AGI conferences annually, at locations including Google’s campus in Mountain View, Oxford University in the UK and Peking University, with the next one coming this August in Shenzhen. Participation by speakers and attendees from major corporations and leading research universities is now the norm.

Now, in 2019, AGI is not yet here. The narrow AI revolution we have seen has produced AIs that are very narrow indeed, capable of carrying out very specific tasks in specific contexts.

But we are seeing some movement in the direction of general intelligence: toward AI systems that can display intelligence regarding problems and situations very different from the ones for which they were programmed or trained.

For instance, Google DeepMind’s AlphaGo only mastered Go, but its successor AlphaZero uses a single learning algorithm to master several different games (Go, chess and shogi) without game-specific human knowledge beyond the rules.

Automated driving systems still need to be customized for each type of car, and heavily customized for each type of vehicle (car, truck, motorcycle, etc.) — but there is active research toward making these systems more generic, more able to use ‘transfer learning’ to generalize what they have learned while driving one type of vehicle to help them better drive other types.

There is nothing resembling a consensus in the AI research community regarding how or when AGI will be achieved. Some believe it could arrive in as little as 10-20 years; others think it could take 50-100. But the percentage of AI researchers who think AGI is 100s or 1000s of years off, or will never be achieved at all, is tremendously smaller than it was 30 years ago when I started my R&D career, or even 10 or 5 years ago.

The most popular approach to both narrow AI and AGI is presently “deep neural networks”, a type of AI that very loosely emulates aspects of human brain structure, and has proved dramatically effective at multiple practical tasks including face recognition, image and video analysis, speech-to-text and game playing.

Some leading AI researchers are skeptical that current deep learning technology is the right direction to follow to achieve AGI. I fall into this camp, as does Gary Marcus (founder of Geometric Intelligence, which was sold to Uber, and of Robust.AI), who has expressed his skepticism in carefully wrought articles and interviews.

While deep learning has garnered deserved attention and funding in recent years, the AI field is much broader and deeper, including many other approaches such as probabilistic logic systems, evolutionary learning, nonlinear-dynamical attractor neural networks and much more. My own OpenCog and SingularityNET AI projects aim to achieve AGI by connecting multiple different AI approaches within an integrated cognitive architecture based on learning and reasoning.

OpenAI stands firmly in the deep learning camp, as do Google DeepMind and Facebook AI Research, all of which are doing AGI-oriented research alongside their more immediately practical AI technology development.

The spate of recent successful commercial applications of deep learning makes the technology a no-brainer investment target for large corporations. This is only natural: large corporations are conservative by nature, and there is always more money for an already-proven technology than for the next big thing that is still in a formative stage. Like Gary Marcus, I suspect that over the next few years, as the limitations of current deep learning tech become clearer, we will see growing corporate interest in a broader variety of AGI approaches.

AGI Researchers — Summoning the Demon?

Along with the rising economic value and practical impact of narrow AI and the increasing push toward AGI R&D, there is increasing concern about the political, economic and broader human implications of AI technology.

There are worries about AI eventually taking over and exterminating, enslaving or imprisoning humanity; and nearer-term worries about AI eliminating most or all human jobs, leaving our socioeconomic systems with a very difficult adaptation process.

Current US presidential candidate Andrew Yang is perhaps the first mainstream politician to take for granted that automation will lead to massive human unemployment; he proposes to confront this with a Universal Basic Income mechanism, initially $1,000/month for every adult US citizen and growing over time.

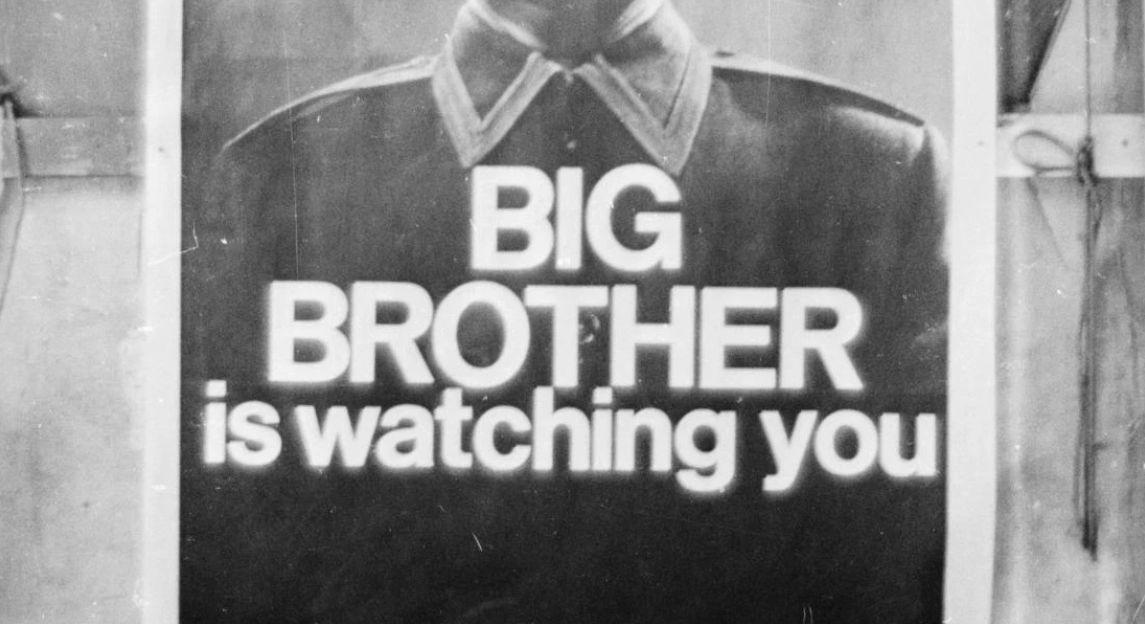

Concern about the ethics of big tech companies’ use of AI on customer data is also on the rise.

Are Facebook, Google and the like gathering our data, analyzing it with AI, then using the results to manipulate our minds and our lives for their own economic benefit?

The answer is clearly yes. And continuing to do this is the fiduciary duty of their management — this is the winning recipe they have found for exploiting network effects to maximize shareholder value.

OpenAI was originally founded to make progress toward AGI in a manner specifically oriented toward the long-term benefit of humanity. It originated in part from co-founder and initial co-funder Elon Musk’s worry that, with AI, “we are summoning the demon”: that AI researchers are letting the devilish genie out of the magic bottle, thinking they’ll be able to control him while they exploit his powers, when of course he will elude their grasp and wreak havoc.

A few years after its creation, OpenAI bifurcated into a nonprofit aimed at fostering general progress toward AI for good, and a for-profit aimed at actually building toward AGI technology. The bulk of the company’s engineers went into the for-profit.

Now this for-profit portion of OpenAI has jumped into bed with big tech in a big way — accepting a billion dollars from Microsoft in exchange for an ongoing close partnership including a commitment by OpenAI to exclusively use Microsoft’s Azure cloud technology for a period of time.

OpenAI’s launch received great fanfare for its association with “up to a billion dollars” of funding from Elon Musk, Sam Altman and others. The nature of this soft commitment was never made quite clear: how much would be given, when, and based on what milestones or triggers.

The billion dollars from Microsoft is not quite as wishy-washy, but there are some similarities. The funds may be released over a period of time, perhaps as long as 10 years. And some of the funds will flow back to Microsoft as payment for Azure cloud usage.

A cynic might see this new partnership as one more example of the role of funky, high-minded startups as recruiting mechanisms for big tech. An idealistic young AI geek joins OpenAI wanting to contribute their talents to the creation of ethical AGI for the benefit of the world — and then winds up working to maximize the competitiveness of Microsoft Azure’s applied deep learning APIs.

Challenging Big Tech with Decentralized Networks

The practical reasons for accepting the strings attached to a billion dollar injection from Microsoft are clear. Cutting-edge AI R&D now requires large amounts of computing power, and leveraging all this compute power requires expert support staff beyond just an AI research team.

Pursuing AGI with one or two orders of magnitude less funding than big tech companies’ AGI teams puts you at a decided disadvantage.

Of course, if a small, modestly funded team has a fundamentally different and better technical approach to AGI, it may prevail over its big-tech competitors. But OpenAI’s basic AI technology orientation is about the same as that of Google DeepMind, Google Brain, Facebook AI Research, Tencent, Alibaba or Baidu.

They are all exploring variations on the same class of algorithms and architectures. If there’s a race to see how far one can push deep neural nets toward AGI, it’s likely the winner will hail from one of the better-funded teams.

Those of us in the small but rapidly growing decentralized AI space are actively pushing back against the increasing centralization of resources that the Microsoft-ization of OpenAI exemplifies. We believe that the creation of broadly beneficial AGI, and before that broadly beneficial narrow AI applications, is more likely to happen if the underlying fabric of AI learning and reasoning algorithms is decentralized in ownership, control and dynamics. A decentralized control structure will allow a network of AI agents to address a greater variety of needs and problems, and to leverage contributions from a greater variety of people and human organizations.

Centralized organizations end up needing to focus narrowly in order to achieve efficiency, because of the information-processing restrictions of the people and sub-organizations at the top of the pyramid. Decentralized organizations can be more heterogeneous.

This is one reason why Linux, developed by a decentralized community, has penetrated both the supercomputer and embedded-device markets much more effectively than its proprietary competitors Windows and macOS.

The Linux community could explore these application areas without having to deal with the cognitive processing constraints of a centralized management structure, or the creativity-inhibiting cultural practices that bog down every large organization.

Decentralized organizations can also roll products out flexibly to markets that don’t offer obvious high returns on investment. This is one reason why Linux-powered smartphones are dominating the developing world. The open-source nature of Linux made it a cost-effective choice for low-cost mobile phone makers like China’s Xiaomi, the Africa-focused Tecno and many others.

Large corporations have a valuable role to play in the modern technology ecosystem — but this role shouldn’t be one of hiring all the AI developers, ingesting all the data about everyone, and then controlling all the AI. In the case of Linux, large corporations utilizing and contributing to the Linux codebase have played — and continue to play — a critical role in advancing Linux as a general-purpose open source OS.

However, these corporations don’t own Linux. IBM recently bought Red Hat for $34B, but what it bought was a system of applications that make it easier for corporations to leverage the underlying open source Linux codebase for their needs.

In my own corner of the AI universe, we have chosen to divide up our enterprises in a different way from OpenAI.

We have the SingularityNET platform, which is fundamentally decentralized, leveraging blockchain technology to ensure that the basic AI fabric is controlled by everyone and no one — i.e. controlled democratically by all the participants.

Decentralized control of processing and data is critically important in the AI ecosystem, because when getting real use out of code requires a lot of data and processing power, open source is not good enough.

Most AI algorithms are embodied in open source code sitting in GitHub or other open code repositories, but without masses of training data and a lot of funds for server time, it’s hard to make use of this code. Decentralized control of data and processing, as blockchain allows, goes a step beyond open source and opens up the training and utilization of AI tools.

We also have OpenCog, an open source project aimed at achieving AGI via integrating multiple AI paradigms — probabilistic logic, evolutionary learning, attractor neural networks, deep neural networks and more — in a single software framework centered on a sophisticated knowledge graph.

And then we have my own Singularity Studio, a for-profit company creating commercial software products to be licensed by large enterprises.

In the operating-systems analogy, SingularityNET and OpenCog are like Linux and Singularity Studio is like Red Hat. This is very different from what OpenAI has done — in effect, they have put their core AI development into the Red Hat portion.

I believe this fundamentally contradicts the ideals on which OpenAI was founded. I have no direct knowledge of the matter, but I speculate that Elon Musk may realize this, and that this may be why he reduced his role with OpenAI around the time of its corporate reorganization.

I believe the leadership of Microsoft is genuinely ethically oriented, and wants to see AI developed for the good of all humanity. But I also believe that their primary goal is to maximize shareholder value.

They might well turn down a highly lucrative chance to build killer robots, although they continue to cooperate with the US military and intelligence agencies, as do Amazon and most likely all the big tech companies (though some are more explicit about it than others).

But the real risk lies in the many small decisions they will make about which applications to pursue and which to back-burner, with profit as the primary motive and broad human benefit as a meaningful but secondary consideration.

Pursuit of financial profit can be a highly beneficial thing, but in an AI context, I would like to see the profit motive driving the application layer, while the core AI layer remains decentralized and largely open source. There are of course risks here, because there are bad actors in the world who will use their insight into the open source code and their ability to contribute to governance of the decentralized networks to do bad things. However, I believe humanity is more good than evil, and I believe there is more good than evil to be derived from openness and decentralization of AI.

It is a well-known fact that, among humans, power corrupts. This fact dooms centralized approaches to management of fundamental AI, and means that giving power over humanity’s first AGI to any single corporation or government is likely to yield ill results.

Now that the world is finally taking AGI seriously, the world should also start waking up to the profound undesirability of centralized human power structures controlling the transition from the narrow AI revolution into the AGI revolution.

There are alternatives to the centralized path to AGI, but there are also many challenges in bringing these alternatives to dominance, as the OpenAI-Microsoft pairing illustrates.

Getting a billion-dollar commitment from Microsoft surely was not easy, but creating a decentralized AI, data and processing ecosystem is most likely a dramatically more difficult task.

But that’s exactly what humanity’s situation now calls for, nothing less.

For aNewDomain, I’m Ben Goertzel.

Cover image: United Archives