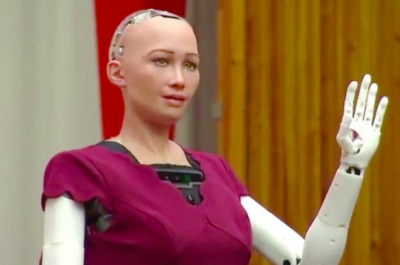

aNewDomain — In the media and academic circles, our humanoid robot Sophia continues to generate a bunch of controversy.

More and more, people are asking whether Sophia’s creators have a responsibility to correct the confusion of people who assume Sophia has human-level general intelligence, even though she doesn’t yet.

Is it enough just to post clear information online about what Sophia is and isn’t? Do we at Hanson have a moral responsibility to make sure the media doesn’t make Sophia into something she isn’t? Should we act even more aggressively to clear up people’s misconceptions?

As a transhumanist, I see these as somewhat peripheral transitional questions. To me, they’re interesting only during a relatively short period of time before artificial general intelligences (AGIs) become massively superhuman in intelligence and capability.

I am far more intrigued by the idea of Sophia as a platform for general intelligence R&D, and as a way of bringing beneficial AGI to masses of humanity (once Sophia or similar robots are in scalable commercial production, that is).

But people keep asking me about this stuff.

Late last year, after the Saudi Arabian government decided to offer Sophia honorary citizenship, the comments and inquiries ratcheted up. In response, I wrote an article in H+ Magazine summarizing the software underlying Sophia as it existed at that point.

I also wanted to address a bunch of these other Sophia-related issues that seem to drive media attention and concern.

Among other things, I describe there the three different control systems we’ve historically used to operate Sophia:

1. a purely script-based “timeline editor,” used for preprogrammed speeches and occasionally for media interactions that come with pre-specified questions;

2. a “sophisticated chat-bot” that chooses from a large palette of templatized responses based on context and a limited level of understanding (and that sometimes also gives a response grabbed from an online resource, or one generated stochastically); and

3. OpenCog, a sophisticated cognitive architecture created with AGI in mind. It’s still in R&D, but it is already delivering practical value in some domains, such as biomedical informatics (see Mozi Health, and a number of SingularityNET applications to be rolled out this fall).

In a recent CNBC segment, I discussed those three control systems in detail. Disclosure: I look pretty ragged there. I did that video interview at 1 a.m. via Skype from my home here in Hong Kong, slouched over in my desk chair, half-asleep.

Now, most public appearances of Sophia use just the first two systems. Sophia’s creator David Hanson and I tend not to opt for the script-based approach; we prefer to interact with Sophia publicly in a mode where we can’t predict what she’s going to say next (i.e., system 2 or 3 above).

OpenCog will play into Sophia more and more in the coming weeks and months. But you can already see examples of Hanson robots controlled using OpenCog. Here, for instance, is Sophia having some funky OpenCog-driven dialogue at the Artificial General Intelligence 2016 conference, largely using stochastic models trained on Philip K. Dick’s novels and then integrated through OpenCog’s dialogue system.

And here’s Hanson Robotics’ Han robot, Sophia’s brother, doing some basic syllogistic logical reasoning using OpenCog’s PLN (Probabilistic Logic Networks) subsystem.

The logic involved here is trivial; this was mostly an early exercise in systems integration. I miss working with Han. He had a certain roguish charm, and hopefully we can start using him more again later this year!
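For readers curious what a single syllogistic step looks like in probabilistic terms, here is a minimal sketch of the independence-based deduction strength formula described in the published PLN literature, written in Python. It is not code from OpenCog or from the Han demo: the function name `pln_deduction` and the example strengths are hypothetical, and the sketch ignores PLN's confidence values and its consistency checks on the inputs.

```python
def pln_deduction(s_ab: float, s_bc: float, s_b: float, s_c: float) -> float:
    """Estimate the strength of A -> C from A -> B and B -> C.

    Sketch of the independence-assumption deduction formula from the
    PLN literature:
        s_AC = s_AB * s_BC + (1 - s_AB) * (s_C - s_B * s_BC) / (1 - s_B)
    Strengths are treated as plain probabilities; PLN's confidence
    values and consistency constraints are omitted here.
    """
    if not 0.0 <= s_b < 1.0:
        raise ValueError("s_b must be in [0, 1) for this formula")
    # First term: members of A that are in B and go on to C.
    # Second term: members of A outside B that land in C anyway,
    # estimated under an independence assumption.
    s_ac = s_ab * s_bc + (1.0 - s_ab) * (s_c - s_b * s_bc) / (1.0 - s_b)
    # Clamp to [0, 1]: inconsistent inputs can push the raw value out of range.
    return min(1.0, max(0.0, s_ac))


if __name__ == "__main__":
    # Hypothetical strengths for a toy "A is B, B is C, so A is C" inference.
    print(pln_deduction(s_ab=0.9, s_bc=0.8, s_b=0.5, s_c=0.6))
```

With these made-up inputs the estimate comes out at about 0.76: the direct chain through B contributes 0.72, and the second term adds a small correction for members of A that are not in B.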

Much of that H+ Magazine article is still accurate regarding the state of play today. However, there has also been some progress since then.

For instance, in the original “Loving AI” pilot study we ran last fall, exploring the use of Sophia as a meditation guide for humans (see a sample video of a session from that study here), we used a relatively simple chat-bot-style control script, which worked fine given the relatively narrow nature of what Sophia needed to do in those trials.

For the next, larger round of studies regarding Sophia’s use as a meditation guide — currently underway at Sofia University in Palo Alto — we are using OpenCog as the control system.

This is frankly not a highly sophisticated use of OpenCog, but using OpenCog here allowed us to integrate perception, action and language more flexibly than was possible with the control system we used for the pilot.

Within the last few months, we have finally (after years of effort on multiple parts of the problem) become able to use the OpenCog system as a stable, real-time control system for Sophia and the other human-scale Hanson robots.

In the Hanson AI Labs component of Hanson Robotics (formerly known as “MindCloud”), working closely with the SingularityNET AI team, we are crafting a “Hanson AI” robot control framework that incorporates OpenCog as a central control architecture, with deep neural networks and other tools assisting as needed in order to achieve sophisticated, whole-organism social and emotional humanoid robotics.

During the next year we will be progressively incorporating more and more of OpenCog’s learning and reasoning algorithms into this “Hanson AI” framework, along with various AI agents running on the SingularityNET decentralized AI-meets-blockchain framework.

Along with more sophisticated use of PLN, and better modeling of human-like emotional dynamics and their impact on cognition, we will also be incorporating cognition-driven stochastic language generation, using language models inferred by our novel unsupervised grammar induction algorithms. And so much more.

I expect that Sophia and the other Hanson robots will continue to generate some controversy — along with widespread passion and excitement.

But I also expect the nature of the controversy, passion and excitement to change quite a lot during the next couple of years, as these wonderful R&D platforms help propel the Hanson Robotics/OpenCog/SingularityNET research teams toward general intelligence.

The smarter these AIs and robots get, the more controversial things are likely to get — but this is also where the greatest benefit for humans and other sentient beings is going to lie.

For aNewDomain, I’m Ben Goertzel.

Ed: An earlier version of this article ran on Ben Goertzel’s personal blog. Check it out here.

Disclosure: The editorial director of this site, Gina Smith, is my colleague and has a day job running editorial for Hanson. She was not involved in the writing of this article, nor is this site profiting financially from Hanson or the publication of this op-ed.