aNewDomain.net: An international team of scientists, mathematicians and philosophers at Oxford University’s Future of Humanity Institute did a geeky deep dive into what is most likely to make the human race extinct and where we should focus our attention if we want to stay alive.

What’s most likely to kill us? Tech. As in sci-fi scary tech. And the institute has proposed a rule of thumb, maxipok (short for “maximize the probability of an OK outcome”), to keep our tech from killing us off in the years to come.

Remember Skynet from The Terminator? In that movie, a worldwide defense network suddenly becomes self-aware and tries to wipe out humanity. Well, the scientists at the Future of Humanity Institute say they are concerned about a similar, real-life scenario. “Almost all the attention given to risks in general … have been given to smaller risks,” says lead researcher Nick Bostrom. “You can make the case that existential risk reduction is the most important task for humanity … that trumps anything else we can do including curing cancer or ending world hunger.”

He goes on:

“In this century, I believe that humanity has a good chance of developing some profoundly transformative technologies, including advanced molecular nanotechnology, artificial intelligence exceeding human intelligence, advances in synthetic biology. And from each of these areas … there could be really major existential risks (to) the very future and survival of our species.”

That’s right. According to the Oxford Future of Humanity Institute research paper, we’re more likely to wipe out our own species with the tech we’ve built than to be done in by asteroids, gamma rays, supervolcanoes, avian flu, alien invasion, nuclear war, germ warfare or global warming. Find that paper embedded in full below the fold.

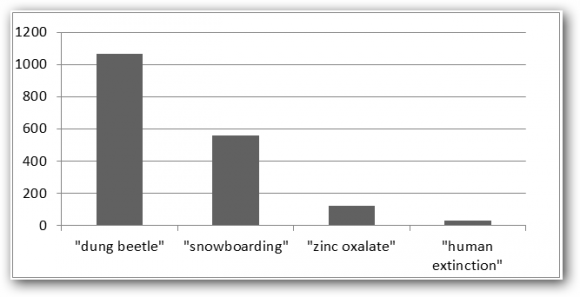

History shows that we have developed the skills needed to survive most catastrophes, but we’re virgins at saving ourselves from the stuff we’ve built, according to the scientists. Of course, we’ve known this was a possibility at least since Charlie Chaplin’s Modern Times and Fritz Lang’s Metropolis. What’s bizarre, according to the researchers, is that scientists have published more research on dung beetles, snowboarding and zinc oxalate than on human extinction. Here’s a bar chart from the Oxford research, below.

Image credit: Existential Risk Prevention as Global Priority, by Nick Bostrom, University of Oxford

It’s a sobering thought. On Tuesday, April 23, 2013, a phony tweet about a terrorist attack briefly wiped $134 billion off the New York Stock Exchange. The tweet was apparently picked up by automated trading systems that simply carried out the instructions we had previously given them.

Sure, a bogus tweet about a nonexistent terrorist incident that briefly drags down everyone’s 401(k) retirement savings isn’t Skynet. But what if that bogus tweet, or worse, some malware carrying a similar message, had reached an autonomous defense system? What then? As the famous exchange in Stanley Kubrick’s 2001: A Space Odyssey goes:

“Open the pod bay doors, HAL.”

“I’m sorry, Dave. I’m afraid I can’t do that.”

The Oxford scientists argue in their research paper, Existential Risk Prevention as Global Priority, that international policymakers must take species-obliterating risks seriously, especially threats related to technology.

We have no track record of surviving threats originating from technology, the scientists argue. Likening our technology to a dangerous weapon in the hands of a child, Bostrom says technological progress has outpaced our ability to control its possible consequences.

These considerations suggest that the dramatic loss resulting from an existential catastrophe is so enormous that the objective of reducing existential risks should be a dominant consideration whenever we act out of an impersonal concern for humankind as a whole, according to the scientists. They argue that it’s useful to adopt the following rule of thumb for such impersonal moral action:

Maxipok

“Maximize the probability of an ‘OK outcome,’ where an OK outcome is any outcome that avoids existential catastrophe.”

At best, maxipok is a rule of thumb or a prima facie suggestion. According to Bostrom:

“It is not a principle of absolute validity, since there clearly are moral ends other than the prevention of existential catastrophe. The principle’s usefulness is as an aid to prioritization. Unrestricted altruism is not so common that we can afford to fritter it away on a plethora of feel-good projects of suboptimal efficacy. If benefiting humanity by increasing existential safety achieves expected good on a scale many orders of magnitude greater than that of alternative contributions, we would do well to focus on this most efficient philanthropy … note that maxipok differs from the popular maximin principle … choose the action that has the best worst-case outcome …

… (because) we cannot completely eliminate existential risk … at any moment, we might be tossed into the dustbin of cosmic history by the advancing front of a vacuum phase transition triggered in some remote galaxy a billion years ago — the use of maximin in the present context would entail choosing the action that has the greatest benefit under the assumption of impending extinction.

Maximin thus implies that we ought all to start partying as if there were no tomorrow. That implication, while perhaps tempting, is implausible.”
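To make Bostrom’s contrast concrete, here is a minimal Python sketch of the two decision rules applied to a made-up choice between two actions. The action names, probabilities and values are hypothetical illustrations, not figures from the paper, and the two scoring functions are a simplified reading of the rules as quoted above. Because every action carries some chance of extinction, maximin ends up comparing only the “we’re doomed anyway” scenarios and picks the party; maxipok looks at the chance of avoiding catastrophe and picks risk reduction.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    probability: float  # chance this scenario occurs, given the action
    value: float        # how good the outcome is (arbitrary units)
    catastrophe: bool   # True if this outcome is an existential catastrophe


# Hypothetical toy numbers for illustration only; not taken from Bostrom's paper.
ACTIONS: dict[str, list[Scenario]] = {
    "party_like_no_tomorrow": [
        Scenario(0.20, 10.0, True),    # extinction, but we had fun first
        Scenario(0.80, 100.0, False),  # humanity survives
    ],
    "invest_in_risk_reduction": [
        Scenario(0.10, 5.0, True),     # extinction anyway, less fun beforehand
        Scenario(0.90, 100.0, False),  # humanity survives
    ],
}


def maximin(actions: dict[str, list[Scenario]]) -> str:
    """Pick the action whose worst-case scenario value is highest."""
    return max(actions, key=lambda a: min(s.value for s in actions[a]))


def maxipok(actions: dict[str, list[Scenario]]) -> str:
    """Pick the action with the highest probability of an OK outcome,
    i.e. any outcome that avoids existential catastrophe."""
    return max(
        actions,
        key=lambda a: sum(s.probability for s in actions[a] if not s.catastrophe),
    )


if __name__ == "__main__":
    print("maximin picks:", maximin(ACTIONS))
    print("maxipok picks:", maxipok(ACTIONS))
```

With these toy numbers, the worst case of each action is extinction, so maximin rewards whichever action makes extinction most enjoyable, which is exactly the “start partying” implication Bostrom calls implausible.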

It’s not that implausible, when you think about it. But the institute makes a strong case that human extinction is a badly neglected area of research.

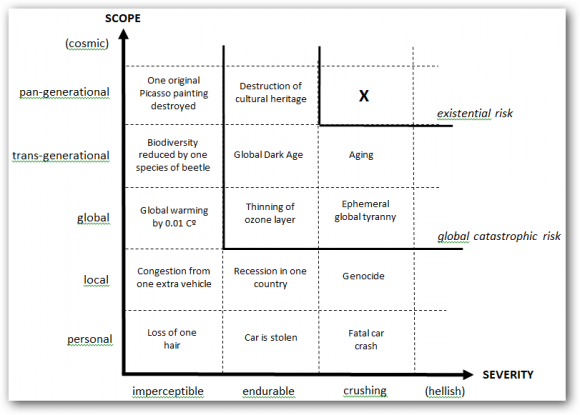

Here’s an analysis of risks the paper talks about.

We’re a plucky, optimistic species, apparently. But considering where tech is going and how fast it is moving, we need to be a lot more cautious. Check out this video in which Nick Bostrom of Oxford University’s Future of Humanity Institute elaborates on the threats we face.

Source: ExistentialRisk.org

Here’s the paper in full, readable below the fold.

Existential Risk Prevention as Global Priority Paper

Credit for the Metropolis movie poster: Wikimedia Commons

Based in Silicon Valley and Tulsa, OK, Tom Ewing is a patent attorney who instructs other attorneys internationally through the World Intellectual Property Organization (WIPO), a United Nations agency in Geneva. He is also a columnist following world issues for aNewDomain.net. Follow +Thomas on Google+ or email him at Tom@aNewDomain.net.